NativeSensors

@NativeSensors

Hello all!

As an open-source organization, we are committed to keeping NativeSensors and EyeGestures free and accessible for everyone. We are extremely thankful for all the donations we have received!

NativeSensors

A.K.A. What We Have Been Working On

- Working on Web Interfaces for v2 Engine:

We are adjusting v2 so it can serve multiple clients and collaborate with the frontend without using too many resources. The latest working version is available on the develop branch here:

https://github.com/NativeSensors/WebGesturesSDK

We are getting close to deploying v2 on our actual server, so stay tuned!

- Fixes and Changes to EyePather:

NativeSensors

Hey everyone!

Exciting news – Engine v2 is going to the web!

We're planning to develop it as a web browser extension. For now, you can check out our repository for reference:

https://github.com/NativeSensors/WebGesturesSDK

This repository contains the code that will help you build your own web applications using our SDK.

Feel free to explore and experiment with it! If you have any questions, don't hesitate to email us.

Best regards, The EyeGestures Team

NativeSensors

Hey everyone,

We are curious about how your gaze tracking or eye tracking projects are progressing.

- Are you using hardware or software for gaze tracking?

- Are you building interfaces or research software?

- What is the biggest issue you are facing with your current project?

If you can answer any of these questions, or if you have anything else to share with us, please email us at:

Best regards,

The NativeSensors Team

NativeSensors

Hey all,

We have updated EyePilot to version 0.0.3alpha! Now:

- It uses the latest EyeGestures engine.

- The CN is set to 2 by default instead of 5 (this impacts the Classic Algorithm's output). You can still change it with the settings slider.

- A new calibration map is used.

Check it out in our product section!

Future:

- We need to work on button pressing and blink controllability.

- The cursor works fine for coarse control, but we need to improve precise control.

NativeSensors

Hey all,

There is a new minor update to EyeGestures.

Now you can customize your calibration points/map to fit your solutions. Simply copy the snippet below and place your calibration points on the x and y axes, using values from 0.0 to 1.0. They will then be scaled automatically to your display.
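To illustrate what "scaled to your display" means, here is a minimal sketch in plain Python. It is not the EyeGestures implementation: the function name `scale_calibration_map` and the simple multiply-by-resolution rule are assumptions made for this example only, and the engine may scale points differently.

```python
from typing import List, Sequence, Tuple


def scale_calibration_map(
    points: Sequence[Tuple[float, float]], width: int, height: int
) -> List[Tuple[int, int]]:
    """Map normalized (x, y) points in [0.0, 1.0] to pixel coordinates.

    Hypothetical helper for illustration; assumes x is multiplied by the
    display width and y by the display height.
    """
    scaled = []
    for x, y in points:
        # Reject points that fall outside the normalized plane.
        if not (0.0 <= x <= 1.0 and 0.0 <= y <= 1.0):
            raise ValueError(f"point ({x}, {y}) is outside the 0.0 to 1.0 range")
        scaled.append((round(x * width), round(y * height)))
    return scaled


# Three calibration points: top-left corner, center, bottom-right corner.
calibration_map = [(0.0, 0.0), (0.5, 0.5), (1.0, 1.0)]
print(scale_calibration_map(calibration_map, 1920, 1080))
```

Because the points are normalized, the same map can be reused on displays of any resolution; only the `width` and `height` arguments change.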

Give it a try!

NativeSensors

Hey everyone,

I've made some updates to EyePather to enhance usability. Feel free to download the latest version! https://polar.sh/NativeSensors/products/cbf72fe2-125b-4ded-bb8a-b3d2f1da0f4b

Changes:

NativeSensors

Everyone, we want to introduce you to an affordable gaze tracker that allows for gaze data collection.

It is designed as a simple tool for students and small research groups who may not have the option to use more robust but more expensive solutions.

NativeSensors

We Have Been Through a Lot!

EyeGestures has significantly evolved from a small, silly experiment running on one laptop to the second generation of an open-source gaze tracking engine that can start competing with commercial solutions, although it may still be on the losing side.

With a working technology stack that can solve problems, it is the perfect moment to catch our breath and scope the project a bit.

Roadmap

NativeSensors

Working with EyeGestures engines can be complex, so I decided to write this post to clarify the process.

This guide will walk you through the necessary components, using the example provided in examples/simple_example_v2.py.

Imports and Main Object + Helper

First, let's focus on the essential imports. From the EyeGestures library, EyeGestures_v2 is the core object that contains the primary algorithm.
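As a rough sketch, the imports could look like the block below. The module paths `eyeGestures.eyegestures` and `eyeGestures.utils`, and the `VideoCapture` helper, are assumptions based on the package layout and may differ between releases, so check examples/simple_example_v2.py in your installed version. The `try`/`except` guard is only there so the snippet also runs where the library is not installed.

```python
# Assumed import paths; verify against examples/simple_example_v2.py.
try:
    from eyeGestures.utils import VideoCapture          # assumed camera helper
    from eyeGestures.eyegestures import EyeGestures_v2  # core engine object
    ENGINE_AVAILABLE = True
except ImportError:
    # Library missing or module layout differs: fall back gracefully.
    VideoCapture = None
    EyeGestures_v2 = None
    ENGINE_AVAILABLE = False

print("EyeGestures engine importable:", ENGINE_AVAILABLE)
```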

NativeSensors

Hey all,

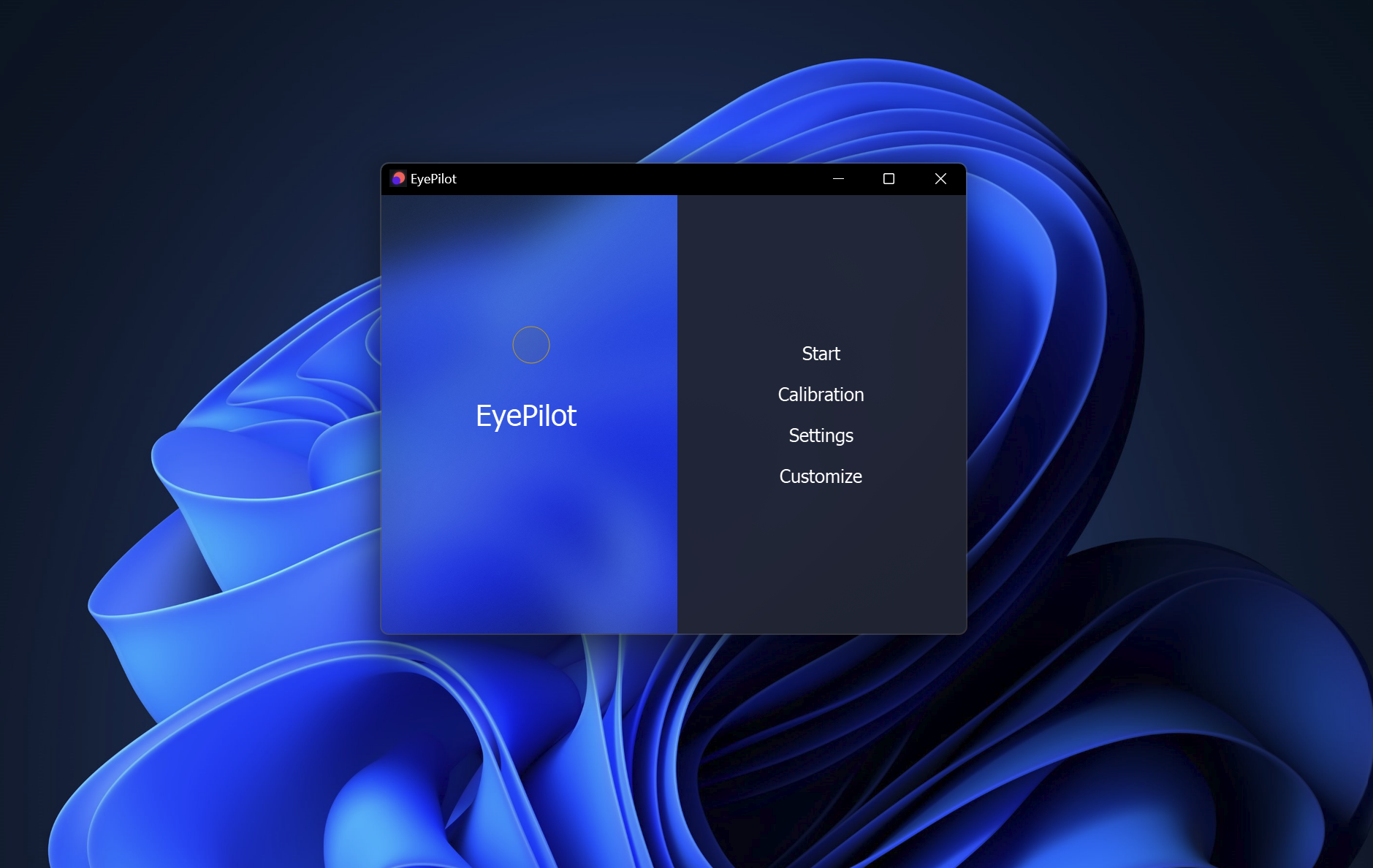

EyePilot is released!

Together with the release of EyePilot alpha to all subscribers, we are providing this tutorial to explain the particular components of the interface, which may not be fully self-explanatory.

Find the download link below:

Here is our WeTransfer link: