Let's write a templating library 🔎 Part 1: lexing

eval

...and learn some Clojure in the process! 👽

Tags: #Clojure

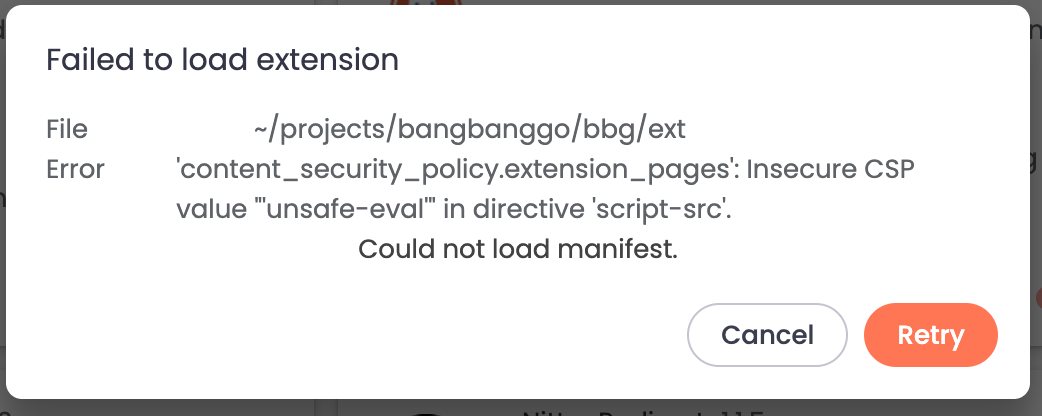

As I was recently preparing a chrome extension for release, I found that the templating library, of all code, was causing problems. Turned out that nunjucks, the templating library in question, uses eval under the hood for performance reasons (as do most other templating libraries). To limit the risk of malicious code being executed near very powerful browser API's, modern extensions can simply no longer rely on eval and eval-like constructs.

Great policy...ánd cumbersome!

---

eval-ing...

There were multiple workarounds possible - all which became less appealing the more I read in to them. All in all it would be quite some effort for a templating library that was way too powerful for my application to begin with.

So the thought I had been suppressing until then, finally emerged: How hard could it really be to write your own templating library? 🥹

In this series we'll take a stab at implementing our own templating library using Clojure, a functional language that uses immutable data structures - a great fit for this problem.

Don't miss out on Part 2

what we're after

Short overview of what sort of features we need from our templating library:

;; variables

(tpl/render "Hello, {{planet}}!" {:planet "Mars"})

;; => "Hello, Mars!"

;; filters with optional arguments

(tpl/render "Hello, {{mention|lowercase|subs:1}}!" {:mention "@Eval"})

;; => "Hello, eval!"

;; blocks

(tpl/render

"Hello, fellow {% if-matches #\"mars\" planet %}robots{% else %}humans{% end-if-matches %}!"

{:planet "mars"})

;; => "Hello, fellow robots!"We won't be covering all these features here, but just to get an idea of the bigger picture.

Let's dive in!

lexer

As is a common when implementing a custom language, at the lowest level there's a so-called lexer (or tokenizer) that, in our case, will turn a provided template into separate tokens based on the syntax of the language.

For example:

"Hello!"

;; becomes

[{:v "Hello!",

:t :text,

:start 0}]

;; {{,,,}} is called a variable

"Hello, {{planet}}"

;; becomes

[{:v "Hello, ",

:t :text,

:start 0}

{:v "{{",

:t :variable-start,

:start 7}

{:v "planet",

:t :symbol,

:start 9}

{:v "}}",

:t :variable-end,

:start 15}]As you can see from the examples, a lexer is pretty low-level: its task is just to recognise e.g. when :text ends and a variable starts, and what characters are valid for tokens of type :symbol etc.

A lexer might not be part of your standard toolkit but it can be a very handy tool to make sense of strings when regular expressions won't suffice or are simply overkill.

As Clojurists we prefer simple over easy and I think a lexer is a nice example of something that's simple (as in: straight forward). And will be easy once you know how to write one.

Standard lexing and parsing techniques are so easy to write, so general, and so adaptable there's no reason to use regular expressions. They also result in much faster, safer, and compact implementations. – Rob Pike

Let's start by introducing the main ingredients:

- the lexer context

- lex-functions

- a main-loop

Let's look at them one by one.

lexer context

Smart data structures and dumb code works a lot better than the other way around. – Eric S. Raymond, The Cathedral and The Bazaar

All the state needed for the lexing process will be captured in a map that represents the 'lexing context' (or simply 'lexer' in the code). It contains the input-string, the current position and the emitted tokens among other things:

{:input "Hello, {{name}}!"

:tokens [] ;; all emitted tokens, the final result

:start 0 ;; the position in the input since we last emitted

:pos 0 ;; the position in the input that we advance by 'reading'

:state #'lex-text} ;; the next lex-function to be appliedThe following schematic shows how input, start and pos relate to each other:

;; input: "foo bar baz"

;; start^ ^posEmitting a token given this lexer-state would emit something like {:v " bar" :start 3 :t :some-type}. start will thereafter be set to the same value as pos, ready to read the next token.

lex-* functions

Next ingredient: lex-functions. These functions know how to read a specific part of the input and emit tokens as a result. E.g. lex-text knows how to handle text and emit text-tokens, lex-inside-variable knows how to handle anything following {{ up til }} etc.

They all follow a common pattern in Clojure: take the state of 'the world' (i.e. the lexer context) and yield a new version of it - there's no global or mutable state.

Finally, they are responsible to provide the right value for :state to communicate to the main-loop what lex-function to apply next.

main-loop

The main-loop drives it all: as long as lex-functions return a lexer with a state, the main-loop will keep applying the corresponding lex-function:

(defn run [lexer]

(loop [{lex-fn :state :as lexer} lexer] ;; 1.

(prn lexer) ;; 2.

(if-not lex-fn

lexer

(recur (lex-fn lexer))))) ;; 3.Some notes:

loophas a vector with 2 elements. The right hand side is the initial value to kickstart the loop, i.e. the lexer that was passed in.

The left hand side (i.e.{lex-fn :state :as lexer}) is 'extracting' anything from the lexer that we need in the coming iteration: i.e. the value for:stateis bound tolex-fnandlexerwill be bound to the (updated) lexer-map.- For debugging purposes we'll print the state of the lexer at the beginning of an iteration.

- Reading this inside-out: pass this iteration's

lexerto thelex-fnand pass that result (i.e. the new lexer) torecur.recurjumps back toloop, which extractslex-fnfrom the new lexer and starts the new iteration.

As the main-loop is the place where all state-transitions occur, it's ideal to put code to inspect the lexer during development (or have specific checks on the shape and contents of the lexer). It also helps keeping the lex-functions simpler: e.g. instead of lex-text calling the next lex-function, it yields its result as data. This way it's more convenient to develop and test lex-functions in isolation.

Besides these main ingredients, there's a bunch of (dumb) helper-functions which we'll see shortly. Let's dive in the first lex-function.

lex-text

The lex-text function will be the entry-point and demonstrates clearly what a typical read-function looks like.

Let's describe what it should do. As is often helpful when using potentially endless loops: let's start with the stop conditions:

;; should read "Hello, " of input "Hello, {{planet}}!"

(defn lex-text [lexer]

;; if: end-of-string? or "{{" upcoming?

;; then: emit a text-token and set a new :state

;; else: read a character

)To make the code of any of the lex-functions more descriptive, we can turn the 'verbs' from the description above into helper-functions. Just like the lex-functions, these all take a lexer as an argument and yield a new lexer (unless stated otherwise):

read-char- increment

pos.

- increment

upcoming?- yields true if what is in front of

posequals the string we pass it.

- yields true if what is in front of

emit-token- adds a new token to

tokenswithvbeing a substring ofinput, e.g.{:v "hello" :t :text :start 0}.

- adds a new token to

eos?- yields true if all of

inputis read.

- yields true if all of

As these helpers are pretty straightforward we won't go into their implementation. Though I think it will be nice to turn this into a 'REPL-recipe'. Subscribe to be notified 🔔

The implementation then becomes:

(defn lex-text [lexer]

;; (cond

;; if1? then1,

;; if2? then2, ,,,)

(cond ;; 1.

(eos? lexer) (-> lexer ;; 2.

(emit-token , :text)

(dissoc , :state)) ;; 3.

;; TBD: "{{" upcoming

:else (read-char lexer)))This shows the first stop-condition, end-of-string, along with the happy path in the else-branch.

In case of eos? we need to do some administration to ensure whatever is read up til that point is emitted as a text-token. Removing state then ensures the main loop will halt.

The happy path is to simply read the next character and keep state so we'll be back here in the next iteration.

Some code details:

condis like a switch statement where the first truthy result on the left hand side will result in evaluating the corresponding right hand side. The keyword:elseis truthy so reading a char is the fallback in this example.->is Clojure's thread-first macro. The way to read it: the expression of a line is inserted in the line below it (at the comma), which is then inserted in the line below it etc. So instead of writing e.g.(/ (+ (* 1 2) 3) 5)(which is hard to read from the inside-out), we can write(-> 1 (* 2) (+ 3) (/ 5))which clearly shows the pipeline of transformations. As most of our functions take alexeras the first argument and return a new lexer, we'll be using it a lot!- end-of-string should stop the main-loop - removing

:stateaccomplishes this.

As our lex-text function is pure, it's easy to give it a spin:

;; to apply once

(lex-text {:input "hello" :start 0 :pos 0})

;; or run til the end

(run {:input "hello" :start 0 :pos 0 :state #'lex-text})

;; =>

{:input "hello",

:start 5,

:pos 5,

:state nil,

:tokens [{:start 0, :t "text", :v "hello"}]}That worked! So how about reading a variable, e.g. {{greeting}}?

Let's sketch what we actually need:

- read "{{"

- read 0 or 1 symbol

- read "}}"

- continue lexing text

In order to keep the lex-functions straight forward (i.e. prevent very specific and error-prone ifs in cond), we'll split this up in several functions, each one of them Not Doing Much™️:

(defn lex-variable-start [lexer]

;; read&emit "{{"

;; we *know* that :start is pointing to the first "{"

;; (or we wouldn't be in this state).

;; finally: set state to #'lex-variable-inside

)

(defn lex-variable-inside [lexer]

;; should read&emit "greeting" of input "greeting}} World"

;; if: "}}" upcoming? Then read&emit "}}" and back to #'lex-text

;; else: delegate to #'lex-symbol which

;; should loop back here.

)

(defn lex-symbol [lext]

;; keep reading while the next character is one of [a-z]

;; finally: emit symbol-token and return to lex-variable-inside

)

(defn lex-variable-end [lexer],,,) ;; like lex-variable-startThis pseudo code shows that we're actually missing a way to 'get back' to a previous lex-function. Clearly we don't want to hardcode a parent lex-function in for example lex-symbol.

For this purpose we can easily extend the lexer context to contain a stack, i.e. a LIFO queue to store parent lex-functions. pop-ing this stack would yield the last lex-function push-ed to it.

Two new helper-functions would support this:

assoc-state- set a value for

:state.(assoc-state :return)will use the state currently at the top of the return-stack.

- set a value for

push-return- push a lex-function to the return-stack.

(push-return :current)will use the currentstate.

- push a lex-function to the return-stack.

Let's simulate how we'd transition from lex-text -> lex-variable-inside -> lex-text -> done using these helpers:

;; starting in lex-text

(-> {:state #'lex-text}

;; push current state to return-stack

(push-return :current)

;; switch lex-function (ignoring lex-variable-start)

(assoc-state #'lex-variable-inside)

;; then in lex-variable-inside:

;; pop the return-stack: state becomes #'lex-text

(assoc-state :return)

;; then in lex-text:

;; pop the (empty!) return-stack: state becomes nil

(assoc-state :return))This is a nice example of what Clojure's data-driven approach typically looks like: data is transformed by chaining pure functions that each essentially take maps and yield maps.

As there's not a strict concept of the lexer being passed around, it's easy to iteratively develop these individual functions in isolation (especially using REPL-driven development).

Let's see these helper-functions in action in lex-variable-inside:

(defn lex-variable-inside [lexer]

(cond

(coming-up? lexer "}}") (-> lexer

(read-char 2)

(emit-token :variable-end)

(assoc-state :return)) ;; 1.

:else (-> lexer

(push-return :current) ;; 2.

(assoc-state #'lex-symbol))))At 1. we need to go back 'up' and thus do (assoc-state lexer :return), setting state to whatever is on top of the return-stack. While at 2., right before we go 'deeper' (by deferring to #'lex-symbol), we push #'lex-variable-inside on the return-stack: (push-return lexer :current).

At this point, something like "hello, {{foo}}" should be tokenized correctly. But what happens for input like "hello, {{foo", or "hello, {{ foo }}"? Let's look at errors.

errors

Our tokenizer will currently simply stop whenever it runs into something it doesn't expect, e.g. the first whitespace in "{{ foo }}". It would obviously be nicer to get an error like Expected a symbol at position 2.

This touches on what category of errors a lexer should report on (and what can be deferred, i.e. to a parser that processes the tokens). There's obviously only so much that a lexer can check for: e.g. it knows how to detect when a symbol starts and ends but to determine if it's really a symbol, it should be parsed. Same goes for determining where a symbol is pointing to, e.g. is uppercase a known filter in "{{greeting|uppercase}}".

What the lexer does know though (or: what it's all about), is what data to expect given the state it's in. It makes sense then to report an error when it can't continue. It makes the lex-function obviously a bit bulkier, but the code also becomes more self-documenting this way:

(defn lex-variable-inside [lexer]

(cond

(coming-up? lexer "}}") (-> lexer

(assoc-state #'lex-variable-end))

(coming-up? lexer symbol-chars) (-> lexer ;; 1.

(push-return :current)

(assoc-state #'lex-symbol))

;; 2. unexpected

(eos? lexer) (-> lexer

(error "Unclosed variable: expected \"}}\""))

:else (let [symbol-emitted? (-> lexer

last-emitted

:t

(= :symbol))]

(cond

symbol-emitted? (error lexer "Expected \"}}\".")

:else (error lexer "Expected a symbol")))))Let's go over it:

In order to determine if a symbol is coming up at the beginning of line 5, coming-up? is now a tiny bit smarter: besides accepting a string as second argument, it also accepts a predicate function that will be applied to the upcoming character in order to determine if something is coming up.

Clojure's sets doubling as functions, keeps the code compact and descriptive:

;; next character ∈ a-z?

(let [a-z? #{\a \b \c ,,,, \z}]

(a-z? (peek-char lexer)) ;; => nil or said characterIn 2. all unexpected situations are handled: end-of-string, no symbol and no "}}" when a symbol was already emitted (using a new helper function last-emitted). Finally, the error-function will, fully in line with our data-driven approach, simply assoc an error-message to the lexer-map and remove the state to halt the main-loop. The tokenizer's caller can then format the error and inform the user what (and where) things went wrong:

Expected a symbol:

Hello, {{1}}!

^Conclusion

Hopefully this article gave you some insight in the benefits of a lexer, how to approach writing one and what it would look like in a functional language.

In the next article we'll look at how to use the tokens to implement the semantics of our templating language, and eventually render a template.

Tags: #Clojure